Episodic Control: The Role of Memory in Decision Making

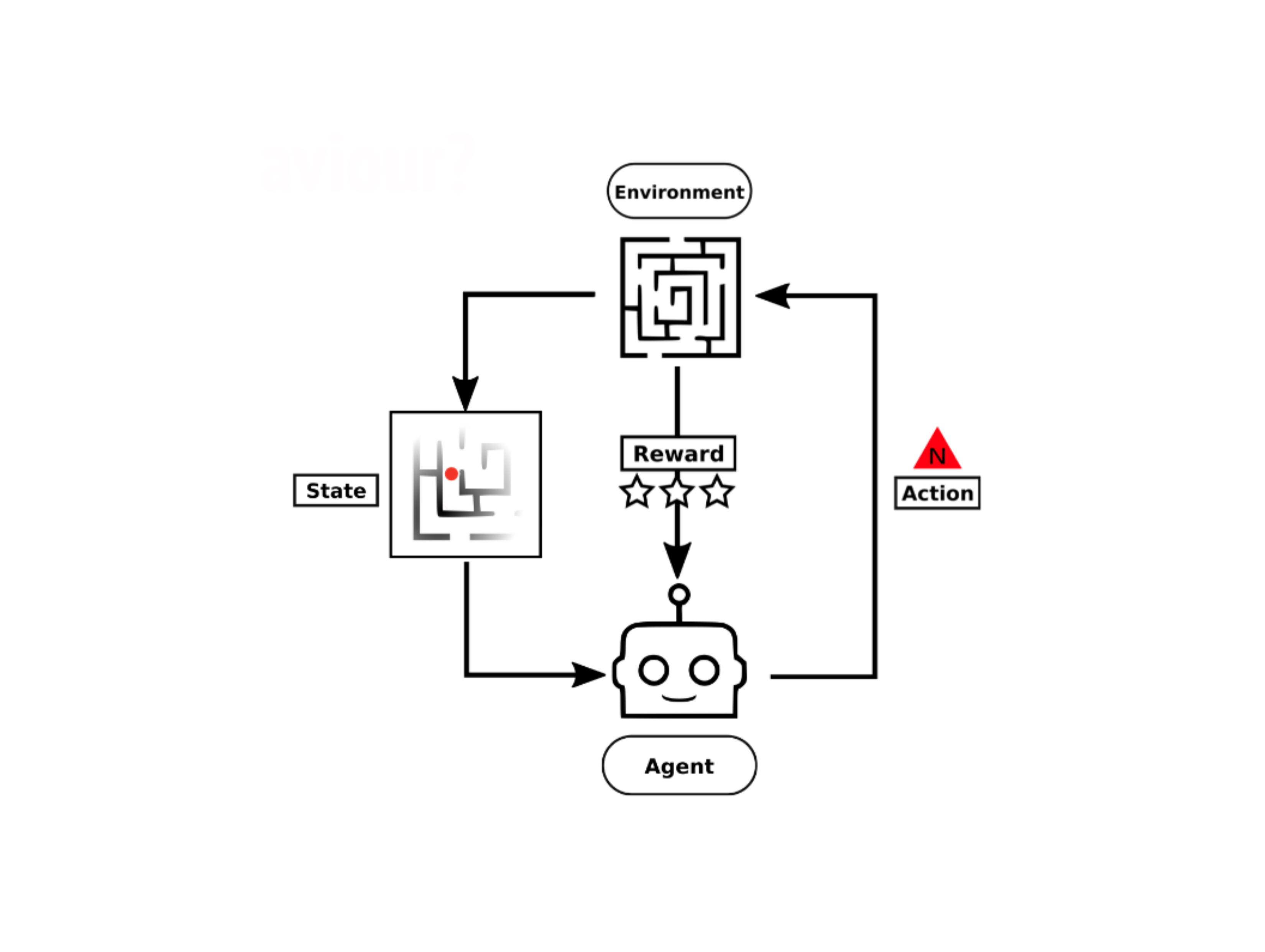

My doctoral research explored how reinforcement learning (RL) could be improved with a control system patterned after autobiographical (episodic) memory in the brain. Traditional RL agents can learn very good behaviour in a variety of environments, but they struggle when the statistics of the world (say, how and when rewards are given) change. Humans and animals are able to learn and re-learn quickly, partly because the brain can store and retrieve memories of specific events ("episodic memory") and so learn even from limited experience. Broadly, I explored the advantages of an RL agent that controls its behaviour using memories of its prior experience over a more traditional RL agent.

Episodic control in reinforcement learning has become a fruitful area of research over the last few years, but many approaches regard memory as a static bank of records and focus on creative ways to store and retrieve entries. I investigated how a more nuanced understanding of the function of episodic memory in biological brains could offer additional advantages in RL tasks. In particular, I looked at how forgetting could actually improve performance by pruning noisy or outdated information. Our paper on forgetful episodic control was recently published as an Open Access article in Frontiers in Computational Neuroscience.
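To give a rough feel for why forgetting can help, here is a toy sketch in Python. It is an illustration only and not the model from the paper: the class `EpisodicMemory`, the `decay` and `threshold` parameters, and the rule of keeping the highest return seen for each state-action pair are all assumptions chosen for simplicity. Each stored memory has a strength that fades over time, and memories that fade below the threshold are pruned.

```python
from dataclasses import dataclass, field


@dataclass
class EpisodicMemory:
    """Toy episodic store: (state, action) -> best return seen, plus a strength."""

    decay: float = 0.9      # hypothetical per-step decay of memory strength
    threshold: float = 0.5  # hypothetical strength below which a memory is forgotten
    values: dict = field(default_factory=dict)
    strength: dict = field(default_factory=dict)

    def store(self, state, action, episode_return):
        key = (state, action)
        # Keep the highest return observed for this state-action pair.
        self.values[key] = max(episode_return, self.values.get(key, float("-inf")))
        # Storing (or re-experiencing) a memory refreshes its strength.
        self.strength[key] = 1.0

    def step(self):
        """Decay every memory and prune those that have faded below the threshold."""
        for key in list(self.strength):
            self.strength[key] *= self.decay
            if self.strength[key] < self.threshold:
                del self.strength[key]
                del self.values[key]

    def act(self, state, actions, default=0.0):
        """Pick the action with the highest remembered return (first one on ties)."""
        return max(actions, key=lambda a: self.values.get((state, a), default))


memory = EpisodicMemory(decay=0.5, threshold=0.2)
memory.store("s", "left", 1.0)           # "left" used to be rewarding
choice_before = memory.act("s", ["left", "right"])

for _ in range(3):                       # time passes without "left" being refreshed
    memory.step()

memory.store("s", "right", 0.5)          # the world changed: "right" now pays off
choice_after = memory.act("s", ["left", "right"])
```

In this sketch, a store that never forgot would keep preferring "left" because of its stale return of 1.0. Once that memory has been pruned, the agent follows the more recent experience instead.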